It’s a war that’s been raging for several years. And while it may lack the sound and the fury of physical combat, it has harmful and dramatic implications for nations, their businesses and their citizens. The information war is going on all around us, destabilising our democracies. In this silent conflict, artificial intelligence has become a highly effective tool for spreading disinformation and deception, but also, more crucially, for combatting deepfakes, fake news and other forms of manipulation. This is our analysis of the AI war.

A cyber war for influence in the age of artificial intelligence

The sophisticated weapons of deepfakes and fake news have emerged as instruments of choice in conflicts, both armed and unarmed, and are being used to destabilise states and social groups alike, and to tarnish businesses’ reputations. The increasingly widespread use of these tactics is a major cause for concern, especially during elections. A recent survey points to high levels of concern among the French population, with 77% of respondents saying they felt the spread of fake news had a considerable impact on the democratic functioning of society and 72% saying they were worried about the influence on voting of disinformation shared on social media. Furthermore, over half of those surveyed (55%) feared that potential disinformation campaigns could lead to the results of European elections being challenged.

In 2021, 51% of internet users in France said they had seen at least one news item they considered either fake or unreliable (i.e. fake news) on news sites or social media over the previous three months. As far back as 2019, a survey published by Visibrain showed that there had been a huge increase in the amount of fake news shared on social media: between 2016 and 2017, the volume of tweets that included or referred to fake news doubled in France and quintupled globally; and in 2019, there were 45.5 million tweets worldwide sharing or commenting on fake news, 1.7 million of them in France alone. In 2023, monitoring organisation NewsGuard said it had catalogued nearly 800 websites created that same year that published nothing but fake news stories created by generative AI.

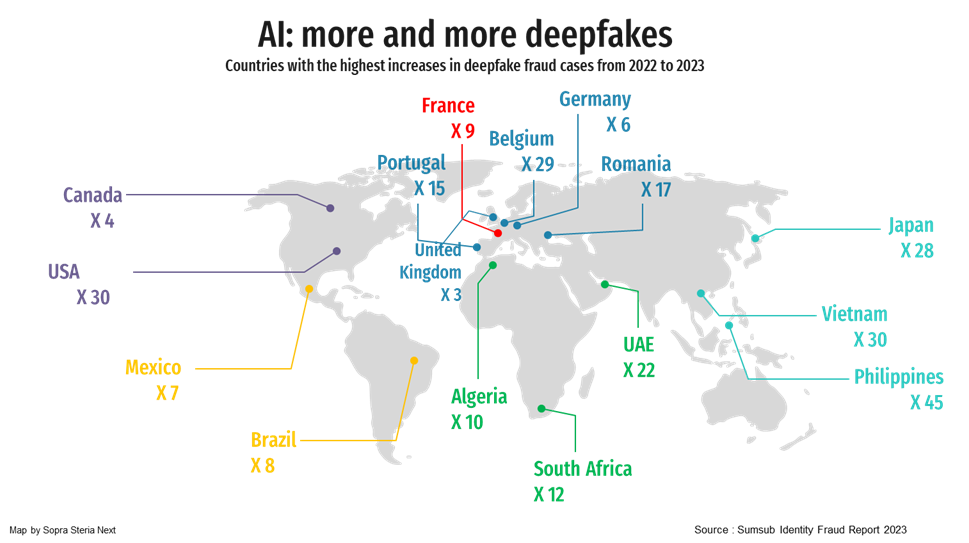

Europe is also a huge target for AI-generated deepfakes, with the number of deepfakes created for the purposes of identity fraud increasing almost eightfold between 2022 and 2023.

In a particularly tense environment due to the war in Ukraine, conflict in the Middle East and elections in both Europe and the United States this year, this steadily increasing phenomenon is becoming more and more worrying, especially with advances in new technologies, most notably generative artificial intelligence.

Since it burst onto the scene in 2022, generative artificial intelligence – which can produce text, images and video – has become omnipresent and widely accessible. Large Language Models (LLMs) optimised for chat can generate all sorts of fake content. Generative AIs can wage disinformation campaigns by posting to accounts on social networks such as Facebook, X (formerly Twitter) and Instagram. Fake news text can be generated with the help of transformer models like GPT-3, while Generative Adversarial Networks (GANs) can produce highly realistic images.

Moreover, the French Ministry of the Interior has emphasised that “foreign interference operations aimed at destabilising states are becoming increasingly common and represent a genuine threat to democracies.”

Developing effective responses to this kind of “informational insecurity” is a complex business, but it will no doubt rely on the burgeoning combination of artificial intelligence and human analysis to anticipate, detect and identify threats, then respond and counterattack.

Harnessing AI to map and analyse disinformation channels and messages

Understanding how social networks are structured and how content is sourced and spread across them used to be a difficult – if not impossible – task. The advent of artificial intelligence means there are now powerful tools available to anticipate and combat these threats as soon as they emerge.

Content that is posted and shared can be analysed using natural language processing (NLP). Various classification tools, such as decision forests, can categorise this content, while LSTM neural networks facilitate machine translation. Similarly, graph technology harnesses the potential of artificial intelligence to analyse relationships between data points. Meanwhile, machine learning systems can create tools for detecting images that have been manipulated or tampered with, for example by looking for the traces that capture systems leave in an image and that retouching algorithms alter.
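To make the graph-based approach more concrete, the short Python sketch below ranks accounts by how far their content travels through chains of reshares. It is an illustration only: the reshare records, the account names and the functions `build_graph`, `reach` and `rank_origins` are all hypothetical, and a production system would work on far larger graphs with dedicated graph tooling.

```python
from collections import defaultdict, deque

# Hypothetical reshare records: (account_that_reshared, account_it_reshared_from).
RESHARES = [
    ("bob", "alice"),
    ("carol", "alice"),
    ("dave", "bob"),
    ("erin", "carol"),
    ("zed", "yan"),
]


def build_graph(reshares):
    """Return a mapping source -> set of accounts that reshared directly from it."""
    graph = defaultdict(set)
    for resharer, source in reshares:
        graph[source].add(resharer)
    return graph


def reach(graph, origin):
    """Count accounts reached from `origin` through chains of reshares (BFS)."""
    seen = {origin}
    queue = deque([origin])
    while queue:
        current = queue.popleft()
        for follower in graph.get(current, ()):
            if follower not in seen:
                seen.add(follower)
                queue.append(follower)
    return len(seen) - 1  # exclude the origin itself


def rank_origins(reshares):
    """Rank every account by how many others its content ultimately reached."""
    graph = build_graph(reshares)
    accounts = {name for pair in reshares for name in pair}
    scores = {name: reach(graph, name) for name in accounts}
    # Highest reach first; ties broken alphabetically for a stable order.
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))
```

Calling `rank_origins(RESHARES)` places the hypothetical account `alice` first, since its content reaches four other accounts. An analyst could use a ranking of this kind as a starting point for examining where a message originated and which accounts amplified it.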

AIs can also be trained to detect disinformation on social media and in message groups, and to issue warnings. By analysing blocks of data sourced from exchanges on networks like Twitter and Telegram, AIs can recognise typical stylistic elements of fake news. They can also be trained to identify potentially problematic content and help operators understand why it has been flagged up. With regard to the impact of technology on jobs, experience and training are key, and supplement the work done by artificial intelligence, which then acts as a “co-pilot” alongside human agents.
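The idea of flagging content while telling the operator why can be sketched in a few lines of Python. The example below is a deliberately simple stand-in: it uses hand-written rules rather than a trained model, and the cue list `URGENCY_WORDS`, the thresholds and the functions `stylistic_signals` and `review` are assumptions made for illustration, not a description of any deployed system.

```python
import re

# Hypothetical stylistic cues; a trained model would learn such signals from
# labelled data rather than rely on hand-written rules like these.
URGENCY_WORDS = {"shocking", "urgent", "exposed", "secret", "breaking"}


def stylistic_signals(text):
    """Return human-readable reasons why `text` looks stylistically suspicious."""
    reasons = []
    words = re.findall(r"[A-Za-z']+", text)

    # Cue 1: a large share of fully capitalised words.
    shouted = [w for w in words if len(w) > 2 and w.isupper()]
    if words and len(shouted) / len(words) > 0.3:
        reasons.append("many words in capitals")

    # Cue 2: runs of exclamation marks.
    if re.search(r"!{2,}", text):
        reasons.append("repeated exclamation marks")

    # Cue 3: sensationalist vocabulary.
    hits = sorted({w.lower() for w in words} & URGENCY_WORDS)
    if hits:
        reasons.append("sensationalist terms: " + ", ".join(hits))

    return reasons


def review(text, threshold=2):
    """Flag `text` for a human operator when enough cues fire, with reasons."""
    reasons = stylistic_signals(text)
    return {"flagged": len(reasons) >= threshold, "reasons": reasons}
```

With these assumed rules, `review("SHOCKING secret EXPOSED!!! Share NOW")` is flagged with three reasons, whereas `review("The council meets on Tuesday.")` is not flagged. Returning the reasons alongside the decision is what lets the tool act as a "co-pilot": the human operator sees why an item was raised and keeps the final say.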

Lastly, further downstream, the European Union’s new AI Act is designed to restrict deepfake activity by requiring those who use AI systems to generate or manipulate content (whether images, audio or video) to indicate whether that content has been artificially created or modified. However, legislation alone cannot fully shield us against the many disinformation campaigns run by our competitors, which is why the fight against disinformation must be waged using the same “weapons” as our adversaries – AI-powered technologies – and be underpinned by strong values that reinforce national sovereignty.

Strong values that reinforce national digital sovereignty

As recommended in the latest OECD report, published on 4 March 2024, a national strategy needs to be drawn up to ensure efforts are more effectively coordinated. It is also vital that a system be put in place to monitor disinformation on social media and keep citizens informed about ongoing attempts at interference. Another crucial step is helping people develop critical thinking to make them more resilient to attempts at manipulation (which could involve, for example, awareness-raising in schools and the development of appropriate tools).

While it is also essential to develop innovative tools, they must comply with the law and associated ethical standards (since, after all, that’s what differentiates us from our adversaries). These tools must also facilitate synergies between human and artificial intelligence to distinguish fact from fake news and spot deepfakes.

It is also important to develop an environment of trust between stakeholders (researchers, industry and politicians) and an ecosystem of excellence based on cooperation between the state, startups, major corporations, digital services firms and members of the European Defence Technological and Industrial Base (EDTIB).

Lastly, AI solutions must be sovereign to enable Europe to play a leading role at the global level. In an increasingly unstable world in which competitors will stop at nothing, artificial intelligence combined with in-depth human analysis will continue to play a major role in combatting disinformation and thereby protecting our democracies. The EU must therefore seek technological sovereignty and autonomy – i.e. freedom from reliance on foreign digital technologies – by training large numbers of engineers, attracting top talent to our businesses and encouraging basic research and the development of secure, effective industrial ecosystems.

[1] The survey was conducted by Ipsos on behalf of Sopra Steria. A sample group of 1,000 people representing the French population (by sex, age, profession, region and type of urban area) was surveyed between 21 and 23 February 2024. Respondents were questioned online using Ipsos’s Online Access Panel. See La population française vulnérable à la désinformation (soprasteria.fr).

[2] https://www.insee.fr/fr/statistiques/6475020#figure1

[3] Fake News : comment se protéger ? (visibrain.com)

[4] https://www.lopinion.fr/economie/les-entreprises-futures-proies-des-fake-news

[5] https://www.newsguardtech.com/special-reports/ai-tracking-center/

[6] The use of deepfakes to commit identity fraud shot up in 2023: Latvia saw the highest number of cases in Europe, with nearly 76,100 people affected out of a population of 1.8 million, while Malta was the least affected, with 3,700 people exposed out of a total population of 531,113. France ranked eighth, with one million people affected out of a population of nearly 68 million. Source: “Sumsub Identity Fraud Report – A comprehensive, data-driven report on identity fraud dynamics and innovative prevention methods,” 2023, p. 55.

[7] La lutte contre la manipulation de l’information | Direction Générale de la Sécurité Intérieure (interieur.gouv.fr)

[8] Long short-term memory, which can be used to recognise handwritten text. LSTM systems can analyse and distinguish handwriting models so as to be able to correctly identify text. This means they can be used, for example, to enable word processing programs to handle handwritten text.

[9] Solution adopted by the German Foreign Office.

[10] The principles of proportionality and “do no harm”; safety and security; the right to privacy and data protection; adaptive, multi-stakeholder governance and collaboration; responsibility and accountability; transparency and explicability; monitoring and human decision-making; sustainability; awareness and education; and equity and non-discrimination.

Authors:

Julien Rouxel, Space, Defence & Security Partner, Sopra Steria Next

Bruno Courtois, Defence & Cyber Advisor, Sopra Steria Defence & Security

Boris Laurent, Consulting Manager, Sopra Steria Next